Your AI Thought Partner: Thinking with Machines

Why great PMs use AI to amplify judgment, not replace it

🎧 Podcast Conversation

In this episode of The Product Leader's Playbook, our AI hosts explore the gap between AI's promise and reality for product teams, unpack the C.O.R.E. framework that transforms scattered prompts into systematic thinking, and discuss why the most successful PMs treat AI as a Socratic partner rather than a magic solution generator.

→ Listen now on Spotify, Apple Podcasts, YouTube, or Amazon Music

"The end of our race is not to be found in technology, but in the perfection of our thinking."

— Josef Pieper

The Promise vs. The Reality

Every product manager claims their job requires clear thinking. Yet most calendars offer little room for actual thought. They overflow with standups, stakeholder reviews, and reactive firefighting. Research accumulates faster than teams can synthesize it. Strategic decisions happen in hallway conversations rather than through systematic analysis.

When generative AI tools arrived, many teams hoped a few prompts would solve the time problem. Early experiments focused on polishing Slack messages and drafting bland release notes. Time saved was minimal. Strategic clarity remained elusive. The technology felt impressive but irrelevant to the hard problems of product leadership.

That disappointment reflects a fundamental misunderstanding of what AI offers product teams. The opportunity is not speed alone. It is synthesis at scale.

The Current State: Adoption Without Strategy

The enterprise AI adoption numbers tell a revealing story. While 71% of organizations regularly use generative AI in at least one business function, more than 80% report no tangible impact on enterprise-level EBIT from their AI investments. This disconnect between adoption and value creation reflects a pattern familiar to product leaders: confusing activity with impact.

The most sophisticated enterprises are moving beyond basic use cases. Research shows companies are positioning AI for fundamental workflow redesign, with nearly half of technology leaders saying AI is fully integrated into their core business strategy. However, 68% of C-suite executives report that AI adoption has created organizational tension, largely because teams lack shared frameworks for prompt quality and output evaluation.

While appetite for enterprise-wide AI adoption is up 25% compared to 2023, only a third of organizations are prioritizing training or change management for AI tools. This reveals the core problem: teams are deploying powerful technology without systematic approaches to capture its value.

The organizations achieving breakthrough results operate differently. Companies with formal AI strategies report 80% success rates in adoption and implementation, compared to just 37% for teams without strategic frameworks. The difference is not the technology itself but the discipline applied to using it effectively.

Why This Matters More Than Speed

AI is particularly valuable in product management because modern product environments have become far more complex. Customer feedback arrives through dozens of channels. Market research spans global segments with different needs. Competitive landscapes shift monthly. Technical constraints change with each sprint.

Research indicates that companies implementing AI thoughtfully achieve 27% higher product success rates, 34% faster decision-making velocity, and 41% better cross-functional alignment compared to teams relying on organic adoption. These improvements compound over time, creating sustainable competitive advantages.

The value emerges not from automating individual tasks but from systematically enhancing the quality of product thinking. Well-directed AI can compress months of research into actionable insights, surface contradictions across roadmaps and market data, and generate competing strategic narratives that teams can pressure-test together.

However, this value materializes only when humans provide rigorous direction and maintain accountability for final decisions.

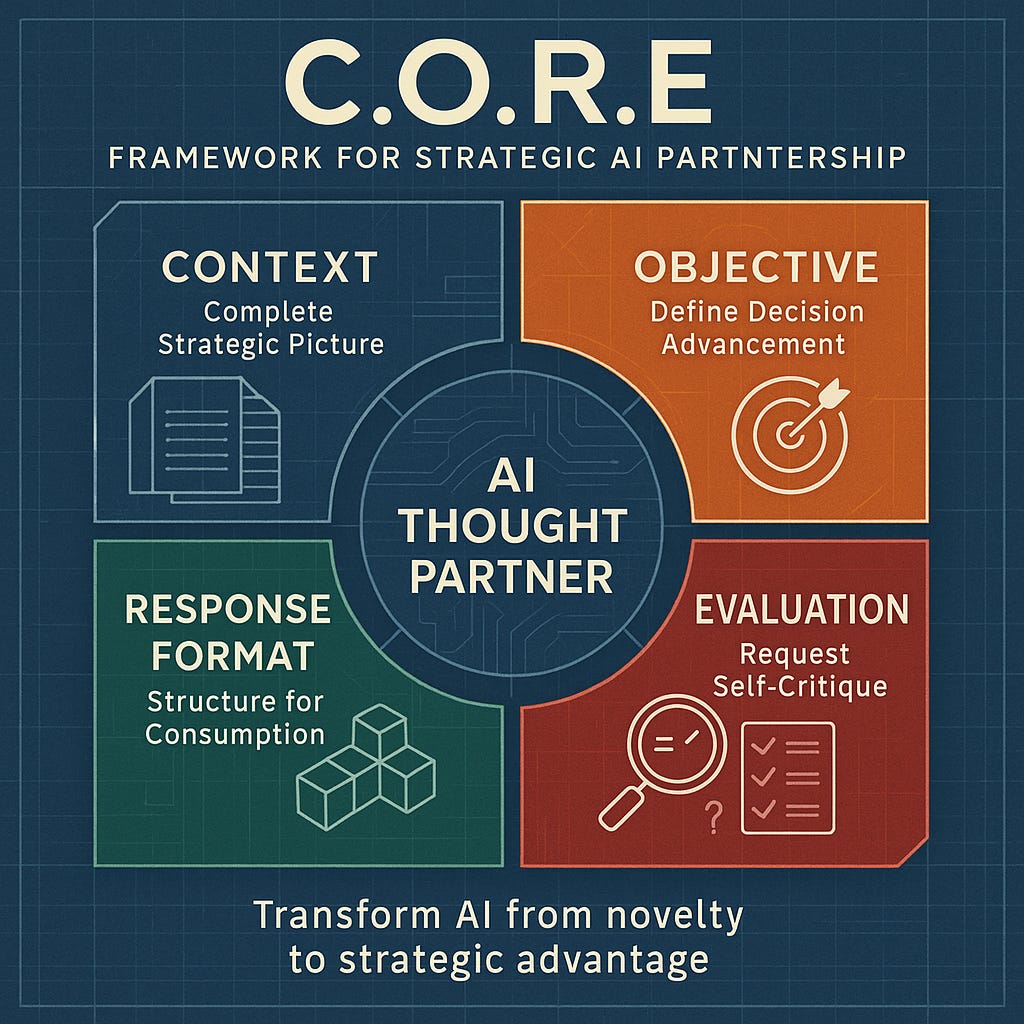

The C.O.R.E. Framework: From Prompts to Partnership

Experience across high-performing product teams reveals four elements that consistently transform AI from a novelty into a strategic asset. The C.O.R.E. framework ensures that every AI interaction advances product decisions rather than generating impressive but irrelevant outputs.

Context: Provide Complete Strategic Picture

AI can only reason with information it receives. Generic prompts produce generic outputs. Effective AI partnership requires comprehensive context about your product, market position, user segments, business constraints, and decision timeline.

Instead of "Help me prioritize features," provide the full strategic context: "Our B2B SaaS platform serves mid-market accounting firms. We're competing primarily on workflow automation rather than core accounting functionality. Our Q3 goal is improving user activation from 23% to 35% within the first week. Here are the eight feature requests from our last user research cycle, along with implementation complexity estimates and customer segment feedback."

The investment in context setup pays dividends across multiple interactions. AI tools that keep persistent project context can retain the strategic framework and apply it consistently to new questions without requiring full re-explanation.

Objective: Define Decision Advancement

Every AI interaction should advance a specific decision or debate. Vague objectives produce scattered outputs that require extensive post-processing. Clear objectives focus AI reasoning on the choices you actually need to make.

"Generate product ideas" becomes "Recommend which of these three customer problems deserves our engineering investment next quarter, given our goal of reducing churn in the 50-200 employee customer segment."

"Analyze competitor features" becomes "Assess whether Competitor X's new integration capability threatens our differentiation with accounting firms who use QuickBooks, and recommend our strategic response options."

The objective should specify not just what information you want but how that information will influence upcoming decisions.

Response Format: Structure for Consumption

AI can present identical insights in dozens of formats. The format determines how easily your team can consume and act on the output. Specify exactly how you want information structured based on how it will be used.

"Provide a prioritized list" becomes "Create a table with columns for customer impact, engineering complexity, competitive differentiation, and strategic fit scores. Include your reasoning for each score and flag any assumptions that would benefit from additional user research."

"Draft a strategy memo" becomes "Write a 300-word executive summary followed by three specific recommendation options. For each option, include resource requirements, timeline, success metrics, and main risks. Use bullet points for easy scanning during our leadership review."

Specifying the format up front cuts down the iteration cycles typically required to make AI outputs presentation-ready.

Evaluation: Request Self-Critique

The most valuable AI outputs include explicit assessment of their own limitations, assumptions, and confidence levels. This prevents over-reliance on AI reasoning while highlighting areas that require human judgment or additional research.

Every significant AI interaction should conclude with evaluation prompts: "What assumptions underlie this analysis that could be wrong? What additional data would most improve the accuracy of these recommendations? Where is your confidence highest and lowest in this assessment?"

This creates systematic accountability for AI reasoning quality and helps teams identify when human expertise should override AI suggestions.
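One way to make the four elements habitual is to keep them in a small reusable template that refuses to produce a prompt when any element is blank. The following is a minimal sketch under stated assumptions, not a prescribed implementation: the `CorePrompt` class, its field names, and the section labels are hypothetical names chosen for illustration, and the example text is adapted from the prompts above.

```python
from dataclasses import dataclass


@dataclass
class CorePrompt:
    """Hypothetical container for the four C.O.R.E. elements."""

    context: str
    objective: str
    response_format: str
    evaluation: str

    def missing(self) -> list[str]:
        """Return the names of any elements left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def render(self) -> str:
        """Assemble the elements into one prompt; reject incomplete prompts."""
        gaps = self.missing()
        if gaps:
            raise ValueError(f"Incomplete C.O.R.E. prompt, missing: {', '.join(gaps)}")
        sections = [
            ("Context", self.context),
            ("Objective", self.objective),
            ("Response format", self.response_format),
            ("Evaluation", self.evaluation),
        ]
        return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)


# Example values adapted from the article's own sample prompts.
prompt = CorePrompt(
    context="Our B2B SaaS platform serves mid-market accounting firms.",
    objective="Recommend which of these three customer problems deserves "
              "our engineering investment next quarter.",
    response_format="Create a table with columns for customer impact and "
                    "engineering complexity.",
    evaluation="What assumptions underlie this analysis that could be wrong?",
)
print(prompt.render())
```

Raising an error on a blank element is a deliberate choice in this sketch: it makes a skipped Evaluation step visible before the prompt is sent rather than after the output has been trusted.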

Practical Implementation: High-Leverage Moments

The C.O.R.E. framework transforms routine product management activities into opportunities for strategic acceleration. Here are four weekly touchpoints where systematic AI partnership creates measurable impact.

Research Synthesis (Monday)

The Challenge: Customer interview transcripts, support tickets, and feedback surveys accumulate faster than teams can extract patterns. Important insights get buried in information overload.

C.O.R.E. Prompt: "Using the twelve user interview transcripts attached, identify the three most frequent pain points mentioned by customers in the manufacturing segment. For each pain point, provide frequency counts, representative verbatim quotes, and correlation with customer tenure. Format as a prioritized table with columns for pain point, frequency, customer quotes, and strategic implications. What contradictions or gaps exist in this feedback that would benefit from follow-up research?"

Impact: Teams report reducing research synthesis time from 4-6 hours to 30 minutes while increasing insight quality through systematic pattern identification.

Hypothesis Development (Tuesday)

The Challenge: Product hypotheses often lack precision or fail to connect to measurable business outcomes. Teams build features based on intuition rather than testable assumptions.

C.O.R.E. Prompt: "Transform this problem statement into three testable hypotheses for our next sprint: 'Customer activation rates drop significantly after the initial setup process.' Consider our user persona (accounting firm managers with limited technical expertise), our metric goal (improve 7-day activation from 23% to 35%), and our development constraints (two-week sprint, one frontend engineer). Structure each hypothesis with assumption, test method, success criteria, and potential pivot options. Where are you least confident in these recommendations?"

Impact: Teams develop more precise hypotheses that connect directly to business metrics and include built-in measurement frameworks.

Scenario Planning (Wednesday)

The Challenge: Strategic decisions involve multiple variables and uncertain outcomes. Teams often consider only obvious alternatives while missing creative options or systematic risk assessment.

C.O.R.E. Prompt: "Given our competitive position against [Company X] in the mid-market accounting software space, evaluate three strategic responses to their new AI-powered reconciliation feature: 1) Build competing AI functionality, 2) Partner with specialized AI providers, 3) Differentiate through workflow integration instead of AI capabilities. Score each option on development timeline, resource requirements, competitive differentiation, and customer retention impact. Include success metrics for each approach and flag the key assumptions that could invalidate your assessment."

Impact: Teams consider broader strategic options and develop systematic criteria for evaluating complex tradeoffs.

Stakeholder Communication (Friday)

The Challenge: Strategic updates often fail to connect product progress with business outcomes. Stakeholders receive information but lack context for decision-making.

C.O.R.E. Prompt: "Convert our Q2 product achievements into three communication formats: 1) A two-sentence executive summary for board slides, 2) A five-bullet engineering brief explaining customer impact, 3) A one-paragraph customer success story for sales enablement. Emphasize our progress toward the 35% activation goal and connect feature releases to business metrics. What potential concerns might each audience have about our current trajectory?"

Impact: Stakeholder alignment improves because communications are tailored to specific audience needs and connected to business outcomes.

Common Failure Modes and Mitigation

Even teams following the C.O.R.E. framework encounter predictable challenges that can undermine AI partnership effectiveness.

Over-Trust in Polished Outputs

AI generates confident-sounding analysis even when operating from incomplete information or flawed assumptions. The polish can mask fundamental reasoning errors.

Mitigation: Establish "devil's advocate" roles in team reviews. Require AI to explicitly state confidence levels and key assumptions. Designate team members to challenge AI recommendations using independent research or domain expertise.

Context Drift Over Time

Strategic context changes as products evolve, markets shift, and team priorities adjust. AI partnerships can become less relevant if context is not systematically updated.

Mitigation: Schedule quarterly context reviews to refresh the context you give your AI tools with current strategy, user research, competitive landscape, and business constraints. Treat context maintenance as essential infrastructure, not optional overhead.
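A quarterly review is easier to keep when something flags which context areas are overdue. The sketch below illustrates one possible check and nothing more: the function name `stale_context`, the 90-day approximation of a quarter, and every date in the review log are assumptions made up for the example.

```python
from datetime import date, timedelta

# Assumption: "quarterly" is approximated as 90 days.
REVIEW_INTERVAL = timedelta(days=90)


def stale_context(last_reviewed: dict[str, date], today: date) -> list[str]:
    """Return, in alphabetical order, the areas reviewed more than 90 days ago."""
    return sorted(
        area
        for area, reviewed in last_reviewed.items()
        if today - reviewed > REVIEW_INTERVAL
    )


# Hypothetical review log covering the four context areas named above.
review_log = {
    "strategy": date(2025, 1, 10),
    "user research": date(2025, 3, 20),
    "competitive landscape": date(2024, 11, 5),
    "business constraints": date(2025, 4, 1),
}

# Areas last reviewed more than 90 days before 1 May 2025 are reported.
print(stale_context(review_log, today=date(2025, 5, 1)))
```

With these illustrative dates, the strategy and competitive landscape entries are the ones flagged for refresh.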

Hallucination in Specialized Domains

AI can confidently generate incorrect information about technical specifications, regulatory requirements, or industry-specific constraints.

Mitigation: Verify all technical, legal, and regulatory recommendations through human expertise. Use AI for structured thinking and option generation, but require domain expert validation before implementation decisions.

Team Skill Degradation

Over-reliance on AI analysis can atrophy team skills in research synthesis, strategic thinking, and critical evaluation.

Mitigation: Rotate AI usage across team members. Require human-generated analysis alongside AI outputs for major decisions. Use AI to accelerate thinking rather than replace it.

The Competitive Dimension

Organizations implementing systematic AI partnerships are creating sustainable competitive advantages that extend beyond individual productivity gains.

Teams using disciplined AI frameworks consistently outperform others in decision-making velocity, strategic option generation, and cross-functional alignment. Companies like Vizient report achieving four times their estimated ROI from AI investments by treating AI as a systematic capability rather than a collection of individual tools.

The competitive advantage emerges from accumulated learning velocity rather than any single AI application. Teams develop better product intuition through systematic exposure to AI-generated insights, alternative perspectives, and structured reasoning frameworks.

This creates compound returns that are difficult for competitors to replicate through technology adoption alone. The advantage lies in the systematic approach to AI partnership, not in the AI tools themselves.

Building Organizational Leverage

Individual product managers using AI effectively create value for their immediate teams. Organizations implementing systematic AI frameworks create scalable capabilities that compound across products and time periods.

Research shows that companies with AI champions (power users who inspire adoption across departments) achieve significantly higher collaboration rates and organizational impact. These champions help establish shared standards for prompt quality, output evaluation, and decision integration.

The most effective organizations treat AI partnership as a core competency requiring training, standardization, and continuous improvement. They develop internal frameworks for prompt evaluation, output verification, and results measurement.

This organizational approach transforms AI from an individual productivity enhancement into a systematic competitive advantage.

The Path Forward

AI will not replace product managers, but product managers who master AI partnership will consistently outperform those who do not. The opportunity extends beyond time savings to fundamental improvements in strategic thinking quality and decision-making velocity.

The C.O.R.E. framework provides a systematic starting point, but effectiveness requires practice, iteration, and team commitment to disciplined implementation. Start with high-frequency, low-risk applications. Measure impact on decision quality, not just time savings. Build organizational capability rather than individual dependency.

Most importantly, maintain human accountability for all product decisions. AI is a powerful thought partner, but product leadership requires judgment, empathy, and accountability that remain uniquely human.

The teams winning in competitive markets have moved beyond AI experimentation to systematic AI partnership. They understand that thinking with machines requires more discipline than thinking alone, but the results justify the investment.

Your customers do not care whether you use AI. They care whether you build products that solve their problems better than the alternatives. AI partnership, implemented systematically, helps you do that more effectively than competitors who treat AI as a novelty rather than a strategic capability.

The choice is not whether to use AI. The choice is whether to use it systematically or sporadically. Systematic wins.
