Metrics That Matter: Stop Measuring What You Shipped

Why Product Teams Need Better Metrics to Drive Real Impact

🎧 Now a Podcast Conversation

This article inspired a new episode of The Product Leader’s Playbook, where our AI hosts examine how product teams fall into the trap of measuring motion instead of momentum. They unpack the illusion of progress, challenge vanity metrics, and walk through the L.E.A.D. Framework as a way to refocus product teams on what actually creates value.

→ 🎙 Listen on Spotify | Listen on Apple Podcasts

“We have lost the concept of truth… and replaced it with statistical success.”

— Alexander Solzhenitsyn

You shipped three new features.

The roadmap is on track.

Sprint velocity looks healthy.

But here’s the real question:

Did anything actually change for the customer?

This article is part of an ongoing series on outcome-focused product strategy. If The Output Trap helped you spot the problem, and Roadmap Rut gave you permission to rethink the plan, this piece offers the tactical model to back up your instincts and sharpen your metrics.

In product management, the temptation to conflate activity with impact is ever-present. When teams are busy, dashboards are green, and release trains are running on time, it can feel like progress is being made. But that feeling can be deceiving. Many of the metrics we rely on, such as story points completed, backlog items closed, and code deployed, are measures of output, not outcomes.

These metrics can be useful, but only up to a point. They tell us what got done, but not whether it mattered. They offer the comfort of motion but rarely provide the clarity of real momentum. This is the illusion of progress: the belief that because we’re moving fast, we must be heading in the right direction.

To lead effectively, product managers must be able to separate activity from achievement. That means shifting our focus from what we shipped to what shifted in our users’ behavior. And to do that, we need better metrics. We need a framework that grounds our success in real-world outcomes.

That’s where the L.E.A.D. Framework comes in: a practical, behavior-first model designed to help product teams track meaningful change, not just mechanical delivery.

The Illusion of Progress

We operate in environments filled with dashboards and reporting tools. They show us story point velocity, epic completion percentages, and on-time delivery charts. These tools promise visibility and performance insights, yet too often they fail to answer the most important question: Are we actually making an impact?

Backlog burn-downs can tell us how quickly a team is executing, but they say nothing about the quality or utility of the features being released. Release frequency may suggest a well-oiled team, but it does not reveal whether users found value in those releases. And too often, we equate that kind of technical progress with product success.

“Most teams don’t suffer from a lack of data. They suffer from a lack of insight.”

This is not to say that output metrics are irrelevant. They serve a valuable internal function, especially when assessing delivery consistency, process efficiency, or resourcing needs. But they are not a substitute for impact. Output tells you how fast the machine is running. Outcome tells you whether it's going in the right direction.

And without real behavioral feedback, there is no learning. No refinement. Just a parade of completed tickets that tell us little about what users need or how they respond.

To break free from this cycle, we must stop asking whether we shipped something and start asking whether it changed anything.

But First, Build the Foundation

Before you can track the metrics that truly matter, you need the infrastructure to make them observable. Outcome-driven measurement isn’t just a mindset shift; it’s a technical one.

If your data only tells you what was deployed, you’ll never know what was discovered, adopted, or acted upon.

To support frameworks like L.E.A.D., teams must invest in a basic data and reporting stack that includes:

Event Tracking – Identify and capture meaningful user actions across your product

Data Collection – Use SDKs, JS snippets, and integrations to log those actions

Data Storage – Store raw and structured data in reliable systems

Data Processing – Clean and transform data to extract insight

Data Visualization – Make behavioral signals visible through dashboards and charts

Reporting Tools – Create ways to analyze, compare, and present outcomes over time
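As a rough illustration of the first three layers (tracking, collection, and storage), here is a minimal Python sketch of an event record and an in-memory collector. The event names, the `Event` fields, and the `EventCollector` class are hypothetical stand-ins; in a real stack an SDK from a tool such as Segment or Mixpanel would handle collection, and a warehouse would replace the Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any

# Hypothetical allow-list of "meaningful" user actions; each team defines its own.
TRACKED_EVENTS = {"signup_completed", "report_exported", "recommendation_clicked"}


@dataclass
class Event:
    user_id: str
    name: str
    timestamp: datetime
    properties: dict[str, Any] = field(default_factory=dict)


class EventCollector:
    """In-memory stand-in for a collection SDK plus raw event storage."""

    def __init__(self, allowed: set[str]) -> None:
        self.allowed = allowed
        self.events: list[Event] = []

    def track(self, user_id: str, name: str, **properties: Any) -> bool:
        # Drop events outside the agreed list so the dataset stays meaningful.
        if name not in self.allowed:
            return False
        self.events.append(Event(user_id, name, datetime.now(timezone.utc), properties))
        return True

    def count(self, name: str) -> int:
        # Simple processing step: how often a given behavior occurred.
        return sum(1 for e in self.events if e.name == name)
```

The allow-list is a design choice, not a requirement: it forces the "what counts as meaningful" conversation to happen before data is collected rather than after.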

In many cases, this also involves embracing event-driven architectures: using triggers, webhooks, and message queues that allow for real-time insight and scalable behavior tracking.
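To show the queue-based pattern in miniature, the sketch below uses Python's standard `queue` and `threading` modules: producers (application code or a webhook handler) publish events and return immediately, while one background worker aggregates them. The `EventPipeline` class and the event names are hypothetical; a production system would typically use a managed broker rather than an in-process queue.

```python
import queue
import threading
from collections import Counter


class EventPipeline:
    """Producers publish events; a single worker thread aggregates counts."""

    def __init__(self) -> None:
        self._queue: queue.Queue = queue.Queue()
        self.counts: Counter = Counter()
        self._worker = threading.Thread(target=self._consume, daemon=True)
        self._worker.start()

    def publish(self, user_id: str, name: str) -> None:
        # Called from request or webhook handlers; never blocks on processing.
        self._queue.put((user_id, name))

    def _consume(self) -> None:
        while True:
            item = self._queue.get()
            if item is None:  # sentinel value signals shutdown
                self._queue.task_done()
                return
            _user_id, name = item
            self.counts[name] += 1
            self._queue.task_done()

    def close(self) -> None:
        # The sentinel is queued last, so every earlier event is processed first.
        self._queue.put(None)
        self._worker.join()
```

Decoupling capture from processing this way keeps tracking from slowing down the product itself, which is the main reason event-driven designs scale well for behavior data.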

Recommended Tools (by category):

· Event Tracking & Analytics: Mixpanel, Amplitude, Heap

· Data Collection & Routing: Segment, RudderStack

· Storage & Processing: Snowflake, BigQuery, dbt

· Visualization & Reporting: Looker, Metabase, Mode

You can’t manage what you can’t measure. And you can’t measure what you can’t see.

Before L.E.A.D. becomes a reality, you have to lay the groundwork.

The L.E.A.D. Framework

Track behavior, not just activity.

When the goal is to create value for users, success must be measured in behavioral terms. Did customers act differently? Did they engage in new ways? Did they accomplish something they couldn’t before?

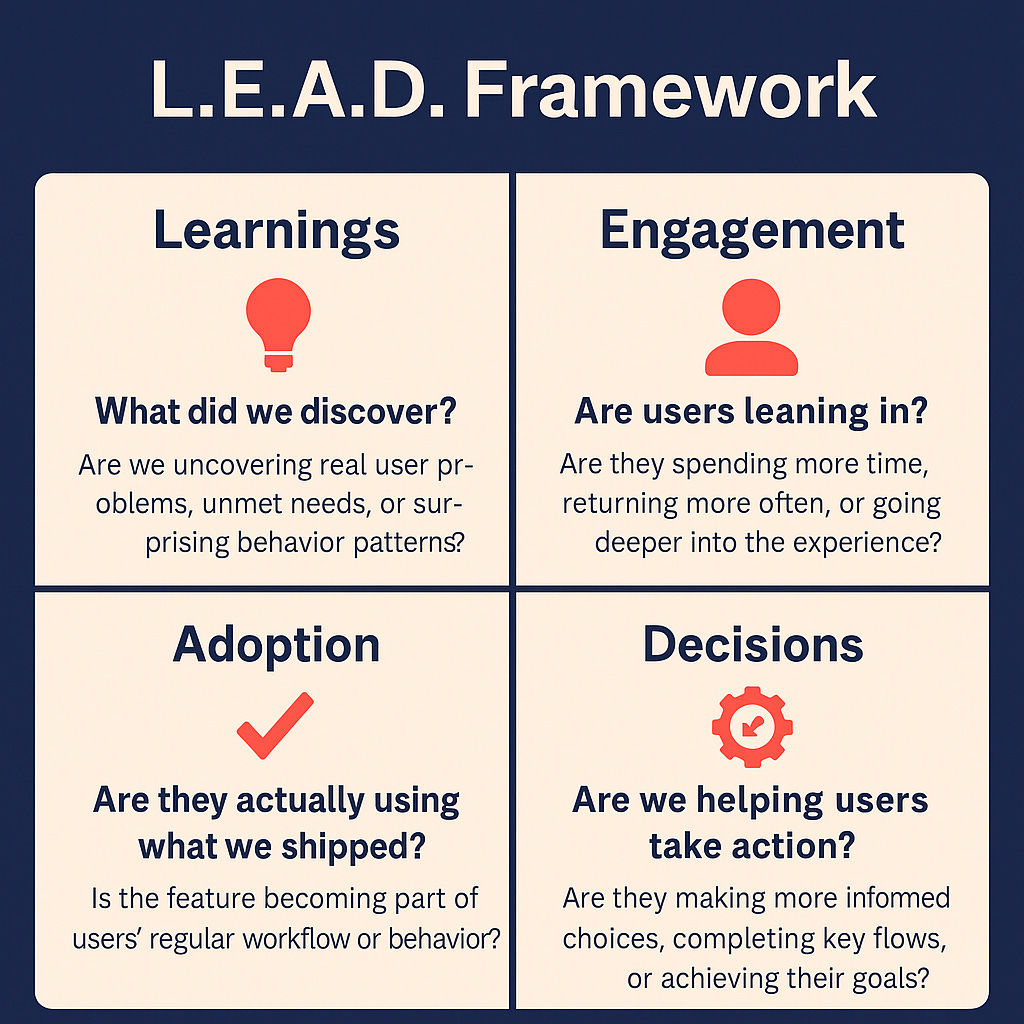

The L.E.A.D. Framework is a tool to help product managers structure that evaluation. It organizes meaningful metrics into four behavior-first categories:

L – Learnings

Are we uncovering new insights?

Have we validated the problem this feature solves?

What qualitative or quantitative inputs have we gained from user interaction?

Example metric: Percentage of features informed by validated user problems
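The Learnings example metric is a simple ratio once each feature records its supporting evidence. In this minimal Python sketch, a feature counts as "validated" if it lists at least one evidence source; that rule and the evidence labels are assumptions, and a team may set a stricter bar.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    name: str
    # Evidence the problem is real, e.g. "interviews", "support tickets" (team-defined).
    evidence: list[str] = field(default_factory=list)


def pct_informed_by_validated_problems(features: list[Feature]) -> float:
    """Percentage (0-100) of features backed by at least one evidence source."""
    if not features:
        return 0.0
    validated = sum(1 for f in features if f.evidence)
    return 100.0 * validated / len(features)
```

For example, a roadmap of four features where three cite interviews or usage data scores 75.0.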

E – Engagement

Are users meaningfully interacting with what we built?

Are sessions longer, deeper, or more frequent?

Do users return to the product or flow we’ve updated?

Example metrics: Time-to-key-action, return visits, session depth
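Two of these Engagement signals can be computed directly from a raw event log. The Python sketch below assumes events arrive as `(user_id, event_name, timestamp)` tuples and uses hypothetical event names (`session_start`, `report_exported`). It reports the median minutes from a user's first session to their first key action, and the share of users who came back on a second calendar day.

```python
from datetime import datetime
from statistics import median
from typing import Optional

# Each event is (user_id, event_name, timestamp); the names used are hypothetical.
Event = tuple[str, str, datetime]


def time_to_key_action(events: list[Event], start: str = "session_start",
                       key: str = "report_exported") -> Optional[float]:
    """Median minutes from each user's first `start` event to their first `key` event."""
    first_start: dict[str, datetime] = {}
    first_key: dict[str, datetime] = {}
    for user, name, ts in sorted(events, key=lambda e: e[2]):
        if name == start:
            first_start.setdefault(user, ts)
        elif name == key:
            first_key.setdefault(user, ts)
    deltas = [
        (first_key[u] - first_start[u]).total_seconds() / 60
        for u in first_key
        if u in first_start and first_key[u] >= first_start[u]
    ]
    return median(deltas) if deltas else None


def return_visit_rate(events: list[Event], start: str = "session_start") -> float:
    """Share (0-1) of users with sessions on two or more distinct calendar days."""
    days: dict[str, set] = {}
    for user, name, ts in events:
        if name == start:
            days.setdefault(user, set()).add(ts.date())
    if not days:
        return 0.0
    return sum(len(d) >= 2 for d in days.values()) / len(days)
```

The median is used rather than the mean so that a few users who take days to act do not distort the picture.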

A – Adoption

Are new features becoming part of user behavior?

Are users integrating this feature into their regular workflow?

Is usage sustained beyond the initial launch spike?

Example metrics: Active user percentage by cohort, long-term retention of new capabilities
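A minimal Python sketch of both Adoption measures follows. The cohort labels (for example, signup month) and the input sets are assumptions about how the data has been prepared. `sustained_ratio` is one hypothetical way to check whether usage holds up after the launch spike: it compares the latest week's adoption rate with the launch week's.

```python
from collections import defaultdict
from typing import Optional


def adoption_by_cohort(cohort_of: dict[str, str], active_users: set[str],
                       feature_users: set[str]) -> dict[str, float]:
    """Per cohort: % of that cohort's active users who used the new feature."""
    active: dict[str, int] = defaultdict(int)
    adopted: dict[str, int] = defaultdict(int)
    for user in active_users:
        cohort = cohort_of.get(user, "unknown")
        active[cohort] += 1
        if user in feature_users:
            adopted[cohort] += 1
    return {c: 100.0 * adopted[c] / active[c] for c in active}


def sustained_ratio(weekly_pct: list[float]) -> Optional[float]:
    """Latest week's adoption % relative to launch week; near 1.0 means usage held up."""
    if not weekly_pct or weekly_pct[0] == 0:
        return None
    return weekly_pct[-1] / weekly_pct[0]
```

A ratio of 0.5 after a month, for instance, signals that half of the launch-week adoption has faded, a pattern that shipping metrics alone would never reveal.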

D – Decisions

Are we enabling better outcomes for the user?

Is the product helping users complete key tasks or make smarter choices?

Are users progressing toward goals or triggering valuable events?

Example metrics: Task success rate, conversion through key flows, decision-making indicators
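Task success rate needs a definition of "success" before it can be computed. In the Python sketch below, an attempt succeeds only if it is completed within a time limit. The 10-minute default is a hypothetical threshold; choose one that fits the task being measured.

```python
from datetime import datetime, timedelta
from typing import Optional


def task_success_rate(attempts: list[tuple[datetime, Optional[datetime]]],
                      max_duration: timedelta = timedelta(minutes=10)) -> float:
    """
    attempts: (started_at, completed_at or None) for one key task.
    Returns the share (0-1) of attempts completed within max_duration.
    """
    if not attempts:
        return 0.0
    successes = sum(
        1 for started, completed in attempts
        if completed is not None and completed - started <= max_duration
    )
    return successes / len(attempts)
```

Counting slow completions as failures is deliberate: a task users finish only after a long struggle is not one the product is helping them complete.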

“Shipping is a milestone. Adoption is a metric.”

These four lenses (learnings, engagement, adoption, and decisions) allow product leaders to interpret success in terms of user behavior. Not every feature will need to track all four, but each release should be able to answer, through at least one of them, the same question: How does this move the needle?

When to Use L.E.A.D.

This framework is most useful when you’re:

Planning or launching new features

Preparing for OKR or quarterly roadmap reviews

Evaluating past bets and feature ROI

Arguing for or against investments in roadmap prioritization meetings

Building alignment across product, design, data, and engineering teams

If you're ever asked "How will we know this worked?", L.E.A.D. is a powerful place to start.

Applying L.E.A.D. in Practice

Make behavior the basis for every product bet.

One of the strengths of the L.E.A.D. Framework is its versatility. Whether you’re launching a major new feature, running an early experiment, or refining an existing flow, this model gives you a way to focus on what matters.

Let’s walk through a few practical examples:

E-commerce Experience

Feature: Personalized product recommendations

Adoption: Percentage of users who click on recommendations

Engagement: Average recommendations viewed per session

Decision: Conversion rate from recommendation to purchase

Why it matters: You’re not tracking whether the feature was built; you’re measuring whether it influences buying behavior.
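The three recommendation metrics can all be derived from per-session records. This Python sketch assumes a hypothetical `Session` record and a simple attribution rule: a purchase counts toward the Decision metric only if it is attributed to a clicked recommendation in the same session. Real attribution windows vary, so treat that rule as an assumption.

```python
from dataclasses import dataclass


@dataclass
class Session:
    user_id: str
    recs_viewed: int    # recommendations shown and seen in this session
    recs_clicked: int   # clicks on recommendations in this session
    rec_purchase: bool  # order attributed to a clicked recommendation (assumed rule)


def recommendation_metrics(sessions: list[Session]) -> dict[str, float]:
    users = {s.user_id for s in sessions}
    clickers = {s.user_id for s in sessions if s.recs_clicked > 0}
    clicked = [s for s in sessions if s.recs_clicked > 0]
    return {
        # Adoption: % of users who clicked at least one recommendation
        "adoption_pct": 100.0 * len(clickers) / len(users) if users else 0.0,
        # Engagement: average recommendations viewed per session
        "avg_recs_viewed": (sum(s.recs_viewed for s in sessions) / len(sessions)
                            if sessions else 0.0),
        # Decision: % of clicking sessions that ended in a rec-attributed purchase
        "rec_to_purchase_pct": (100.0 * sum(s.rec_purchase for s in clicked) / len(clicked)
                                if clicked else 0.0),
    }
```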

Mobile App Redesign

Feature: Streamlined onboarding experience

Learnings: Drop-off analysis reveals points of user confusion

Adoption: Percentage of users completing onboarding

Engagement: Time to first meaningful action after onboarding

Why it matters: Good onboarding is invisible. Success is measured not in UI aesthetics, but in seamless activation.
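Drop-off analysis for a linear onboarding flow reduces to counting how many users reached each step. The Python sketch below assumes each user's furthest step is already known and that steps must be completed in order. The step names are hypothetical. Onboarding completion is simply the last row's count divided by the first row's.

```python
def onboarding_funnel(steps: list[str],
                      furthest_step: dict[str, str]) -> list[tuple[str, int, float]]:
    """
    steps: ordered onboarding step names (assumes a strictly linear flow).
    furthest_step: user_id -> last step that user reached.
    Returns (step, users reaching it, % lost since the previous step).
    """
    index = {name: i for i, name in enumerate(steps)}
    reached = [0] * len(steps)
    for step in furthest_step.values():
        # Reaching step i implies the user passed every earlier step.
        for i in range(index[step] + 1):
            reached[i] += 1
    rows = []
    for i, name in enumerate(steps):
        prev = reached[i - 1] if i else reached[0]
        drop = 100.0 * (prev - reached[i]) / prev if prev else 0.0
        rows.append((name, reached[i], drop))
    return rows
```

The step with the largest percentage loss is usually where qualitative follow-up (session replays, interviews) pays off most.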

B2B SaaS Dashboard

Feature: Advanced reporting module

Learnings: User interviews reveal confusion with existing reports

Adoption: Weekly active users on the new dashboard

Decision: Percentage of users who base key decisions on new data

Why it matters: This isn’t about more charts; it’s about helping users make more informed decisions.
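Weekly active users on the new dashboard is a distinct-count per week. This Python sketch assumes dashboard visits are available as `(user_id, date)` pairs and groups them by ISO calendar week using the standard library's `date.isocalendar()`. Repeat visits from the same user within a week count once.

```python
from collections import defaultdict
from datetime import date


def weekly_active_users(views: list[tuple[str, date]]) -> dict[tuple[int, int], int]:
    """Distinct users per (ISO year, ISO week) who opened the new dashboard."""
    weeks: dict[tuple[int, int], set] = defaultdict(set)
    for user_id, day in views:
        iso = day.isocalendar()
        weeks[(iso[0], iso[1])].add(user_id)
    return {week: len(users) for week, users in sorted(weeks.items())}
```

Grouping by ISO year as well as ISO week avoids merging the last days of December into the wrong week at year boundaries.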

Product Prioritization Tie-In

The L.E.A.D. Framework also helps you speak the language of outcomes during planning sessions. When debating roadmap priorities, this model arms you with behavioral rationale, not just project status updates or general goals.

Instead of saying:

“This supports our Q3 OKRs.”

You can say:

“We’ve seen declining adoption in this flow, and this initiative is designed to reverse that trend.”

“This test helps us generate new insights in an area we currently don’t understand well.”

“This unlocks a high-leverage behavior that supports both engagement and revenue.”

This elevates the conversation. It shifts discussion away from internal pressures and into the realm of external impact, where real product strategy belongs.

Reinforcing the Shift

From outputs to outcomes. From delivery to impact.

The L.E.A.D. Framework is more than a reporting method. It is a change in mindset. It positions product leadership not as the efficient delivery of features, but as the careful cultivation of behavior that drives value.

That means:

Prioritizing learning before launching

Tracking meaningful engagement instead of shallow usage

Measuring adoption as a proxy for relevance

Designing for decision-making, not just navigation

Because at the end of the day, what matters is not what you shipped but what stuck.

💬 Let’s Discuss

How are you measuring success right now?

What metrics have helped you prove impact, not just activity?

Where do you still feel stuck tracking motion instead of momentum?

Which part of the L.E.A.D. Framework feels most useful in your role?

I’d love to hear how you're navigating the shift from outputs to outcomes in your own product work.

🎧 Want to Go Deeper?

This article is discussed in a podcast episode of The Product Leader’s Playbook, streaming everywhere:

🔹 Spotify | 🔹 Apple Podcasts | 🔹 YouTube | 🔹 Amazon Music